EU AI Act: Certification Rules by Industry

The EU AI Act is shaking things up for anyone working with artificial intelligence in Europe. It’s all about making AI safer, more transparent, and more respectful of people’s rights. Here's the deal:

- Four Risk Levels: AI systems are grouped into four categories based on risk:

  - Unacceptable Risk (e.g., social scoring): Banned outright.

  - High Risk (e.g., healthcare, finance, automotive): Strict rules and certifications.

  - Limited Risk (e.g., chatbots): Must be transparent about being AI.

  - Minimal Risk (e.g., spam filters): Hardly any rules.

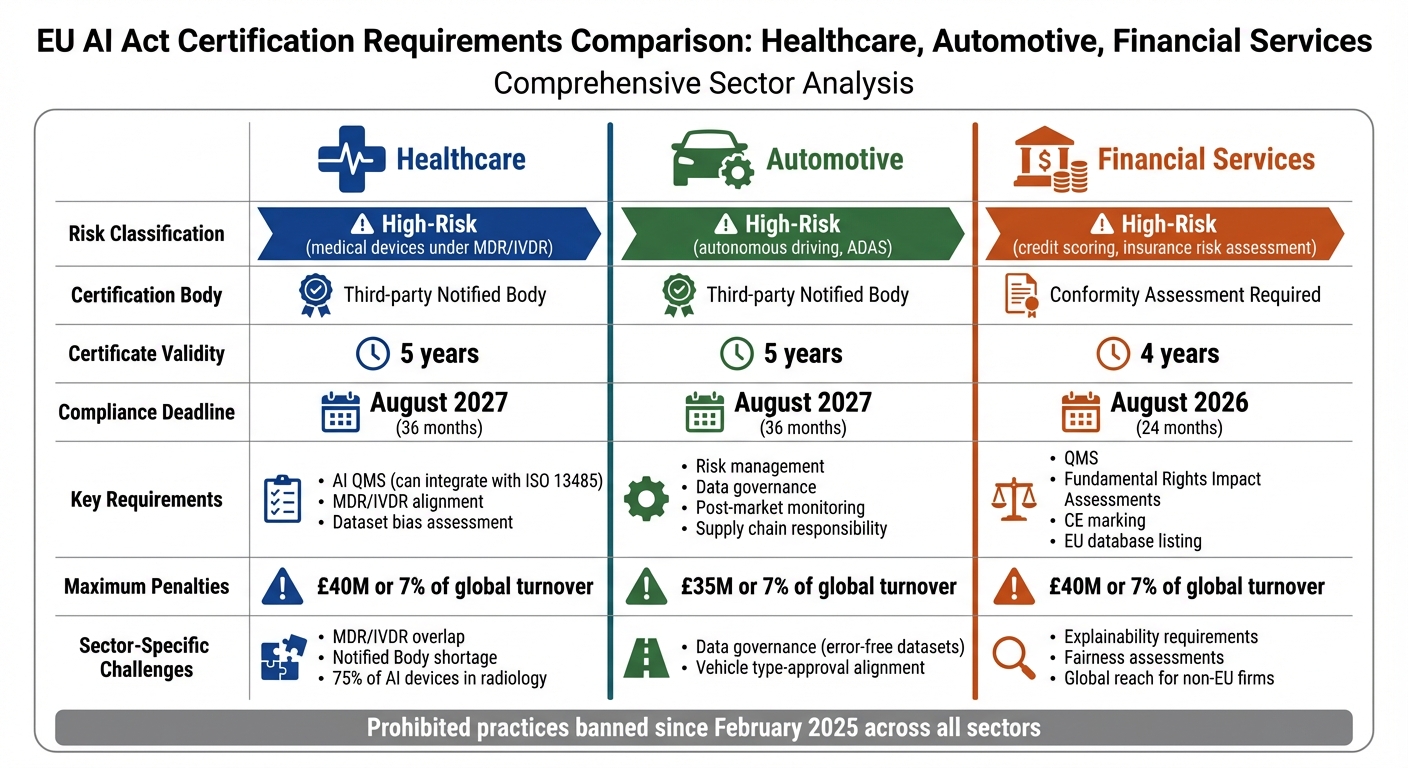

- High-Risk Systems: These need certifications with detailed checks on risk management, data quality, and human oversight. Certificates are valid for four or five years, depending on the sector.

- Sector-Specific Deadlines:

  - Healthcare and automotive have 36 months (until August 2027) to comply.

  - Financial services face a tighter timeline, with a 24-month window ending August 2026.

- Challenges: Overlapping regulations in healthcare, supply chain issues in automotive, and fairness concerns in finance make compliance tricky. Costs are high, especially for SMEs, and fines for non-compliance can hit €35 million or 7% of global annual turnover.

The Act is complex but manageable with the right approach. Whether you’re in healthcare, automotive, or finance, understanding your obligations and planning early is key. Don’t wait - figure out where your AI system fits and get started on compliance now.
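To make the four-tier model concrete, here is a minimal Python sketch of a first-pass triage lookup. The use cases, the `triage` helper, and the wording of each consequence are illustrative assumptions drawn from the summary above; a real classification needs legal review against the Act itself.

```python
from enum import Enum


class RiskTier(Enum):
    UNACCEPTABLE = "unacceptable"
    HIGH = "high"
    LIMITED = "limited"
    MINIMAL = "minimal"


# Headline consequence of each tier, paraphrased from the summary above.
TIER_CONSEQUENCE = {
    RiskTier.UNACCEPTABLE: "banned outright",
    RiskTier.HIGH: "strict rules and certification",
    RiskTier.LIMITED: "must disclose that it is AI",
    RiskTier.MINIMAL: "hardly any rules",
}

# Hypothetical example mapping; not an exhaustive or authoritative list.
EXAMPLE_USE_CASES = {
    "social scoring": RiskTier.UNACCEPTABLE,
    "credit scoring": RiskTier.HIGH,
    "customer service chatbot": RiskTier.LIMITED,
    "spam filter": RiskTier.MINIMAL,
}


def triage(use_case: str) -> str:
    """Return a one-line first-pass verdict for a named use case."""
    tier = EXAMPLE_USE_CASES.get(use_case.strip().lower())
    if tier is None:
        return "unknown: needs manual classification"
    return f"{tier.value}: {TIER_CONSEQUENCE[tier]}"


if __name__ == "__main__":
    for case in ("Spam filter", "Credit scoring", "Voice cloning"):
        print(case, "->", triage(case))
```

Anything the lookup does not recognise falls through to manual review, which is the safe default for a triage tool like this.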

EU AI Act Certification Requirements by Industry: Healthcare, Automotive, and Financial Services

1. Healthcare

Certification Requirements

Healthcare AI systems classified as high-risk are subject to rigorous certification under the EU AI Act. If the AI is used as a safety component in a medical device, or itself qualifies as a medical device under the Medical Device Regulation (MDR) or In Vitro Diagnostic Regulation (IVDR), it must undergo a third-party conformity assessment by a Notified Body before it can hit the market [3][4].

Under Article 17, providers are required to implement an AI Quality Management System (AI QMS). The good news? This can be integrated into existing frameworks like ISO 13485, so there’s no need to reinvent the wheel [4]. Documentation, as outlined in Annex IV, must cover everything from system architecture to design, training, and performance. Article 11 streamlines things by allowing manufacturers to combine AI Act requirements with MDR/IVDR into a single technical file, cutting down on unnecessary admin [4].

Another key requirement is the assessment of training, validation, and testing datasets. These must be checked thoroughly for bias and to ensure they’re suitable for the context in which they’ll be used [4]. Certificates issued by Notified Bodies for medical AI systems are valid for up to five years, giving manufacturers some breathing room [2].

Compliance Timelines

Healthcare organisations have a 36-month transition period to bring AI systems covered by Annex I of the AI Act into compliance [4]. This extended timeline reflects the complexity of coordinating the AI Act with existing medical device regulations. However, the prohibitions on unacceptable AI practices took effect much sooner: February 2025, just six months after the Act entered into force [3][4]. These staggered timelines highlight the challenges of ensuring compliance in such a complex sector.

Sector-Specific Challenges

The healthcare sector faces several hurdles in meeting these certification standards. The overlap between the AI Act and MDR/IVDR often results in duplicated efforts for risk management and documentation [3][4]. As an analysis published in Nature pointed out:

"The increased requirements resulting from the MDR are so extensive that it is considered to be an existential threat." [4]

On top of that, a shortage of Notified Bodies could delay AI system certifications, echoing the bottlenecks seen during MDR implementation [4]. This is particularly concerning given that around 75% of commercial AI-enabled devices are in radiology - most of which are classified as high-risk under the AI Act [3].

Small and medium-sized enterprises (SMEs) are hit hardest by the compliance costs and complexity. Many may have to withdraw products or scale back their offerings. And if that wasn’t enough, integrating General-Purpose AI models (like Large Language Models for clinical reasoning) adds another layer of difficulty. These models fall under the jurisdiction of the EU AI Office, while medical devices are regulated by national authorities, creating a fragmented regulatory landscape [3][4].

2. Automotive

Certification Requirements

When it comes to automotive AI, particularly for autonomous driving and Advanced Driver Assistance Systems (ADAS), the stakes are incredibly high. These systems are classified as safety-critical components under EU type-approval regulations [5]. To meet compliance, manufacturers must undergo a thorough conformity assessment. This process ensures they meet stringent requirements around risk management, data governance, technical documentation, and human oversight [3][10].

Interestingly, the certification process isn't something you can handle in-house. It requires a third-party assessment by a notified body [16][17]. Once certified, the certificate is valid for five years, after which manufacturers must go through a re-assessment [2]. Additionally, companies are required to establish a post-market monitoring system. This system continuously gathers and reviews performance data throughout the AI system's operational life [2].

Another layer of complexity lies in determining responsibility across the supply chain. For example, an EU-based manufacturer (OEM) integrating an AI system into a vehicle is typically seen as the "provider." On the other hand, a non-EU developer might be classified as a "supplier" unless they market the system under their own trademark [5]. For EU-based importers of automotive AI components, there's an obligation to ensure that non-EU providers have appointed an authorised representative and can provide evidence of compliance [5]. Navigating these roles and responsibilities is no small feat, especially with the strict timelines and challenges that come with compliance.

Compliance Timelines

The clock is ticking for automotive companies, as they must comply with the regulations by 2 August 2027. This gives them a 36-month window from the Act’s official start [16][17]. The extended timeline reflects the difficulty of merging AI-specific regulations with the traditional vehicle type-approval processes outlined in Regulation (EU) 2018/858 [15][16].

However, some rules are already in effect. For instance, prohibitions like restrictions on emotion recognition and social scoring have been enforceable since February 2025. Automotive firms need to ensure they’re already adhering to these specific restrictions where applicable [5].

Sector-Specific Challenges

Aligning the AI Act’s requirements with existing vehicle safety legislation is no small task for the automotive sector [15][16]. A major hurdle is data governance. The regulations require datasets to be, to the best extent possible, free of errors and complete [7], which is challenging given the complexities of real-world driving scenarios.

Non-compliance comes with heavy financial consequences. Failing to meet data governance or transparency requirements could lead to fines of up to €15 million or 3% of global annual turnover [16][11]. Even more severe penalties apply to breaches of prohibited practices, with fines reaching €35 million or 7% of total worldwide annual turnover [9][11]. These figures underscore just how critical it is for automotive firms to get it right. There’s no room for shortcuts when safety, compliance, and significant financial risks are on the line.
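To see how a "fixed amount or percentage of turnover" ceiling plays out, here is a rough Python sketch. It assumes the higher of the two values sets the ceiling, which is how the Act treats large undertakings (different rules apply to SMEs). The `fine_cap` helper and the turnover figures are hypothetical, and amounts are whole currency units.

```python
def fine_cap(fixed_cap: int, turnover_percent: int, global_turnover: int) -> int:
    """Return the maximum possible fine for one penalty tier.

    Assumption: the ceiling is whichever is higher, the fixed cap or the
    given percentage of worldwide annual turnover. Integer arithmetic
    avoids floating-point rounding on large amounts.
    """
    percent_based = global_turnover * turnover_percent // 100
    return max(fixed_cap, percent_based)


if __name__ == "__main__":
    # Prohibited-practice tier quoted above: 35 million or 7% of turnover.
    large_oem = fine_cap(35_000_000, 7, 1_000_000_000)
    smaller_supplier = fine_cap(35_000_000, 7, 100_000_000)
    print(f"Turnover 1bn:  cap {large_oem:,}")
    print(f"Turnover 100m: cap {smaller_supplier:,}")
```

For a firm with a turnover of 1 billion, the percentage drives the ceiling (70 million); for one with 100 million, the fixed 35 million figure does.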

3. Financial Services

Certification Requirements

When it comes to the EU AI Act, financial services are under some of the toughest certification rules. AI tools used for credit scoring, assessing creditworthiness, or determining risks and pricing for life and health insurance are automatically labelled as high-risk under Annex III [1]. Interestingly, AI systems designed to detect financial fraud are not classified as high-risk [1].

For high-risk systems, a formal conformity assessment is mandatory. This process checks for compliance in areas like risk management, data governance, technical documentation, and human oversight [1][9]. Providers are also required to implement a Quality Management System (QMS) to ensure ongoing adherence to these standards [1]. Once a system meets all the criteria, it must carry the CE marking and be listed in a centralised EU database managed by the European Commission [1].
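One way to track these obligations internally is a simple gap checklist. The Python sketch below is only an illustration: the item names are shorthand for the requirements listed above, and `missing_items` is a hypothetical helper, not part of any official tooling.

```python
# Shorthand labels for the high-risk obligations described above.
REQUIRED_ITEMS = (
    "risk_management",
    "data_governance",
    "technical_documentation",
    "human_oversight",
    "quality_management_system",
    "ce_marking",
    "eu_database_registration",
)


def missing_items(completed: set[str]) -> list[str]:
    """Return the obligations not yet evidenced, in checklist order."""
    return [item for item in REQUIRED_ITEMS if item not in completed]


if __name__ == "__main__":
    done = {"risk_management", "technical_documentation", "human_oversight"}
    for gap in missing_items(done):
        print("Outstanding:", gap)
```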

Certification for financial AI systems is valid for four years. After that, a re-assessment is needed to extend the certification for another four years [2]. This period is shorter than the five-year validity granted to automotive systems under Annex I.
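If you want to plan re-assessments, the validity periods translate into simple date arithmetic. This Python sketch assumes a certificate runs to the same calendar day four or five years after issue; the exact expiry convention, the sector keys, and the `certificate_expiry` helper are assumptions for illustration.

```python
from datetime import date

# Validity periods as described in this article.
VALIDITY_YEARS = {
    "healthcare": 5,
    "automotive": 5,
    "financial_services": 4,
}


def certificate_expiry(issued: date, sector: str) -> date:
    """Return the assumed expiry date for a certificate issued on `issued`."""
    target_year = issued.year + VALIDITY_YEARS[sector]
    try:
        return issued.replace(year=target_year)
    except ValueError:
        # Issued on 29 February and the target year is not a leap year.
        return issued.replace(year=target_year, day=28)


if __name__ == "__main__":
    print(certificate_expiry(date(2026, 8, 2), "financial_services"))
    print(certificate_expiry(date(2026, 8, 2), "automotive"))
```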

Compliance Timelines

The clock is ticking for high-risk AI systems in financial services. These systems must meet core compliance obligations by 2 August 2026, giving financial institutions a 24-month window from the Act’s enforcement date [1][9]. This is notably tighter than the 36-month timeline given to automotive manufacturers.

Some rules are already in play. For instance, private actors, including financial institutions, have been banned from engaging in social scoring since February 2025 [8][9]. Additionally, by 2 February 2026, guidelines for high-risk AI systems will be available, outlining compliance pathways [9].
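For planning purposes, the sector deadlines can be turned into a simple countdown. In the Python sketch below, the financial services and automotive dates come from this article; the exact healthcare date is an assumption (the article only says "August 2027"), and `days_remaining` is a hypothetical helper.

```python
from datetime import date

DEADLINES = {
    "financial_services": date(2026, 8, 2),
    "automotive": date(2027, 8, 2),
    # Assumption: same day as automotive, since both get 36 months.
    "healthcare": date(2027, 8, 2),
}


def days_remaining(sector: str, today: date) -> int:
    """Days until the sector deadline; negative once it has passed."""
    return (DEADLINES[sector] - today).days


if __name__ == "__main__":
    today = date.today()
    for sector in DEADLINES:
        print(f"{sector}: {days_remaining(sector, today)} days")
```

A negative result means the deadline has already passed, which is worth surfacing loudly in any internal dashboard.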

Sector-Specific Challenges

Just like the healthcare and automotive industries, financial services must grapple with ensuring their AI systems are explainable and fair. As noted by Mario F. Ayoub, Jessica L. Copeland, and Sarah Jiva from Bond Schoeneck & King PLLC:

"U.S. financial institutions must ensure their models are explainable, fair and secure. Algorithmic impact assessments and transparency measures will be essential" [9].

This is no easy task, especially for organisations using advanced machine learning models.

Failing to comply with the Act can result in steep penalties. Fines can go up to €15 million (or 3% of global turnover) for breaches related to data or transparency, and up to €35 million (or 7% of global turnover) for prohibited practices [6].

The Act’s reach isn’t limited to the EU. Non-EU financial institutions must also comply if their AI systems or outputs are used within the EU [9]. For U.S.-based banks, insurers, and fintech firms, this means the rules apply if they serve European customers or have operations in the EU. It’s a stark reminder of how wide-reaching the Act’s influence is across industries.

Advantages and Disadvantages by Sector

The EU AI Act introduces different certification cycles depending on the sector: five years for healthcare and automotive systems (Annex I) and four years for financial services (Annex III) [2]. These timelines highlight the varying operational demands across industries.

One universal benefit is the presumption of conformity [11]. By adopting harmonised standards, organisations can simplify certification processes and minimise uncertainty [11]. However, high-risk AI systems come with stringent requirements for accuracy, robustness, and cybersecurity. This includes ensuring protection against threats like data poisoning and model evasion [10].

Sector-Specific Compliance Challenges

Each sector faces unique hurdles when it comes to compliance. For instance, in healthcare, companies must navigate the overlapping demands of the MDR/IVDR regulations and the AI Act, effectively doubling their certification workload [13]. Yet, embedding these legal requirements into product design can turn compliance into a strategic edge [12].

Financial services, on the other hand, deal with intricate fairness assessments. Thankfully, this sector benefits from an already well-established compliance culture. For example, banks using fairness-aware AI systems have managed to reduce approval disparities between demographic groups to under 3%, all while maintaining accurate risk predictions [12].
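To show what a figure like "under 3%" might measure, here is a minimal Python sketch of an approval-rate gap between groups. It assumes the disparity is the difference in approval rates in percentage points; the group names, counts, and `approval_rate_gap` helper are made up for illustration and are not the method used in the cited work.

```python
def approval_rate_gap(outcomes: dict[str, tuple[int, int]]) -> float:
    """Return the gap between the highest and lowest group approval rates.

    `outcomes` maps a group label to (approved, total_applications).
    """
    rates = [approved / total for approved, total in outcomes.values()]
    return max(rates) - min(rates)


if __name__ == "__main__":
    # Hypothetical counts for two demographic groups.
    sample = {"group_a": (412, 1000), "group_b": (390, 1000)}
    gap = approval_rate_gap(sample)
    print(f"Approval-rate gap: {gap:.1%}")
    print("Within 3% threshold:", gap < 0.03)
```

This is only one of several fairness metrics; a gap in approval rates says nothing on its own about whether risk predictions are equally accurate across groups.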

Cost and Operational Impacts

The Act brings significant operational costs, which vary by industry. In healthcare and automotive sectors, companies face hefty expenses due to fees for notified bodies, rigorous technical testing, and frequent re-assessments. Financial services, while not exempt, usually encounter moderate-to-high costs, primarily driven by documentation and ongoing monitoring requirements. Non-compliance carries steep penalties, with fines reaching up to €35 million or 7% of global turnover for prohibited practices, and up to €15 million or 3% for data governance violations [6].

Balancing Costs and Innovation

The Act’s impact on innovation also differs across sectors. Automotive manufacturers have raised concerns that the extensive documentation requirements could delay advancements in collision avoidance technology [14]. On the flip side, AI systems created purely for scientific research and development are exempt, which might safeguard early-stage innovation in fields like life sciences [13].

Furthermore, notified bodies hold the power to suspend or revoke certifications if a system no longer complies with the requirements. Providers must act quickly to address any issues to retain certification [2].

Each sector faces its own set of challenges and opportunities under the EU AI Act, from balancing costs and compliance to navigating innovation pressures. The way these industries adapt will play a critical role in shaping their future under this regulatory framework.

Conclusion

Healthcare and automotive systems are classified under Article 6 as safety components of existing products. This means they require assessments by third-party Notified Bodies and come with five-year certificates. On the other hand, financial services AI - used in areas like credit scoring and insurance risk assessment - falls under Annex III as a standalone high-risk system. These systems need four-year certificates and must include Fundamental Rights Impact Assessments [15][16]. These classifications highlight the varied compliance requirements across industries, emphasising the need for a clear and streamlined approach.

To ensure proper compliance, it's crucial to map your system to its correct classification. The sector-specific frameworks discussed earlier provide the necessary guidance. For instance, healthcare providers can integrate AI Act compliance into their existing ISO 13485 processes. Meanwhile, financial institutions should focus on implementing Fundamental Rights Impact Assessments [15][16]. High-risk systems embedded in regulated products, such as medical devices and vehicles, must comply by August 2027, while standalone Annex III systems in financial services face the August 2026 deadline.

A key factor to understand is the distinction between being a provider and a deployer. This difference determines the scope of responsibility, the level of conformity assessment required, and potential liability [16]. If you significantly modify a third-party model, you transition from being a deployer to a provider, which brings full certification responsibilities [16].
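The provider/deployer question can be framed as a short decision rule. This Python sketch is a deliberately simplified reading of the points made in this article (own-brand marketing and significant modification both lead to provider status); the `ai_act_role` function and its inputs are hypothetical and no substitute for legal analysis.

```python
def ai_act_role(
    develops_system: bool,
    markets_under_own_trademark: bool,
    significantly_modifies_third_party_model: bool,
) -> str:
    """Return 'provider' or 'deployer' under a simplified reading."""
    if (
        develops_system
        or markets_under_own_trademark
        or significantly_modifies_third_party_model
    ):
        # Provider status brings full certification responsibilities.
        return "provider"
    return "deployer"


if __name__ == "__main__":
    # A bank that fine-tunes a vendor model heavily before rollout.
    print(ai_act_role(False, False, True))
    # A bank that uses the vendor model as delivered.
    print(ai_act_role(False, False, False))
```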

To meet Article 12's record-keeping requirements, implementing automated logging systems is essential. Additionally, a robust Quality Management System (QMS) should be in place, covering both sector-specific regulations and AI Act standards [15][16]. For healthcare providers, Alison Dennis from Taylor Wessing explains:

"The AI Act specifically permits providers/manufacturers to combine the QMS for AI Act compliance with the QMS for their medical device" [15].

Navigating overlapping regulatory demands requires strong technical leadership. Clear guidance is what transforms complex regulatory obligations into actionable steps. Whether it's preparing Model Evidence Packs for financial compliance or managing Notified Body assessments for medical devices, experienced technical direction ensures obligations are met without stifling innovation.
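Coming back to the Article 12 record-keeping point, an automated log entry could look something like the Python sketch below. The field names, the choice to store a hash of the input instead of the raw data, and the `build_log_record` helper are all assumptions; the Act does not prescribe this format.

```python
import hashlib
import json
from datetime import datetime, timezone
from typing import Optional


def build_log_record(
    system_id: str,
    model_version: str,
    input_payload: dict,
    output: str,
    operator_id: Optional[str] = None,
    now: Optional[datetime] = None,
) -> str:
    """Build one JSON log line for a single AI system decision."""
    timestamp = (now or datetime.now(timezone.utc)).isoformat()
    # Hash a canonical form of the input so the record is traceable
    # without copying personal data into the log.
    canonical = json.dumps(input_payload, sort_keys=True).encode("utf-8")
    record = {
        "timestamp": timestamp,
        "system_id": system_id,
        "model_version": model_version,
        "input_sha256": hashlib.sha256(canonical).hexdigest(),
        "output": output,
        "operator_id": operator_id,  # human overseer, if any
    }
    return json.dumps(record, sort_keys=True)


if __name__ == "__main__":
    line = build_log_record(
        system_id="credit-scoring-demo",
        model_version="1.4.2",
        input_payload={"applicant_ref": "A-1001", "amount": 12000},
        output="refer_to_human",
        operator_id="analyst-7",
    )
    print(line)
```

Each line can be appended to write-once storage so that records remain available for audits over the system's lifetime.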

FAQs

What challenges do SMEs face in meeting the EU AI Act requirements?

SMEs often find themselves wrestling with the sheer complexity of the EU AI Act’s certification and compliance requirements. For smaller organisations, limited resources and expertise can make this process feel like climbing a mountain. Take high-risk AI systems, for instance - they come with hefty demands, like preparing detailed technical documentation, managing risks thoroughly, and maintaining strict data governance. All of this can drain time and money, which are often in short supply for SMEs.

On top of that, meeting transparency obligations, ensuring human oversight, implementing cybersecurity measures, and setting up ongoing monitoring can be a real headache. These steps are non-negotiable to stay compliant and dodge penalties, but they’re no small feat - especially for businesses that don’t have a dedicated compliance team in place.

As regulations continue to shift, keeping up with the changes and weaving compliance into everyday operations can feel like an uphill battle. That’s why tailored advice and support that’s specific to their industry can make a world of difference, helping SMEs tackle these challenges without feeling swamped.

What are the implications of the EU AI Act for AI systems in financial services?

The EU AI Act sets tough standards for high-risk AI systems, especially in areas like financial services. These systems are required to go through conformity assessments, maintain comprehensive technical documentation, and establish continuous monitoring processes. The goal? To ensure these systems stay transparent, safe, and compliant.

For financial services, AI tools used for credit scoring or for life and health insurance risk pricing must meet these rigorous standards to reduce risks and safeguard users. Systems designed purely to detect financial fraud are not classified as high-risk. Organisations using high-risk AI systems should start gearing up now to align with these rules. Doing so not only avoids penalties but also keeps operations running smoothly.

What happens if automotive companies fail to comply with the EU AI Act?

Failure to stick to the EU AI Act can lead to some serious consequences for automotive companies. We're talking about hefty fines, potential restrictions on entering the EU market, and mandatory conformity checks to prove compliance. If you're using high-risk AI systems without the proper certification, you could also face extra penalties or limits on rolling out your technology.

But it's not just about the fines and restrictions. Ignoring compliance could take a toll on your reputation, slow down innovation, and delay the launch of AI-powered solutions. For automotive companies, staying on top of these regulations isn't just a legal necessity - it’s key to keeping your competitive edge in the EU.