Emerging AI Certification Standards in Europe

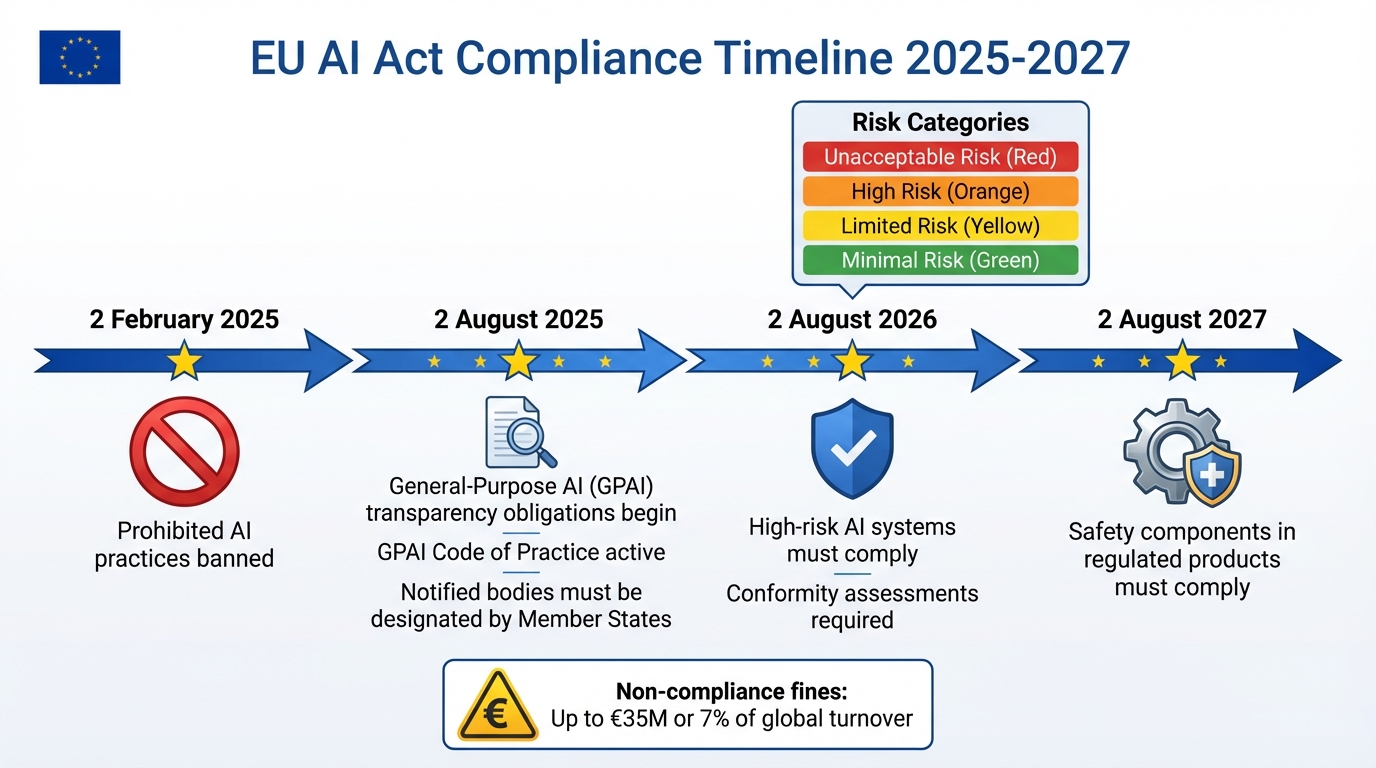

Europe’s AI regulations are getting serious, and if you’re in the game, you need to keep up. The EU AI Act, in force since 1 August 2024, is reshaping how businesses develop and deploy AI systems. It’s not just about guidelines anymore - this is about mandatory certification, strict risk-based rules, and hefty fines (we’re talking up to €35 million or 7% of global turnover). The deadlines are rolling in fast, with obligations in place for general-purpose AI (GPAI) since August 2025, and high-risk systems facing compliance checks in 2026-2027.

Here’s the deal: AI systems are now grouped into four risk levels - unacceptable, high, limited, and minimal. High-risk systems (think recruitment tools, credit scoring, or anything safety-critical) need a conformity assessment before hitting the EU market. Even general-purpose AI models must meet transparency, safety, and copyright standards. And for UK businesses? If you’re selling to the EU, these rules apply to you too.

The process might sound daunting, but it’s manageable with a clear plan. Start by categorising your AI systems, audit your compliance gaps, and build a solid documentation process. Notified bodies, appointed by EU Member States, will carry out third-party assessments for the high-risk systems that require them. For GPAI, voluntary adherence to the General-Purpose AI Code of Practice (in place since August 2025) can simplify compliance.

Early preparation is key. Map your risks, organise your technical files, and align with emerging standards like ISO/IEC 42001. Need help? Bringing in experts or working with partners experienced in EU certification can save you a lot of headaches - and money. The clock’s ticking, so don’t wait until the last minute to get your systems in order.

EU AI Act Compliance Timeline and Deadlines 2025-2027

Regulatory Framework: EU AI Certification Requirements

The EU's regulatory framework for AI certification is built around a structured compliance process, with the EU AI Act at its core. This legislation takes a risk-based approach to pinpoint AI systems that require formal evaluation. For instance, high-risk AI systems - such as those used in biometrics, critical infrastructure, or education - must undergo conformity assessments before they can be introduced to the market. These assessments ensure the systems meet standards in areas like risk management, technical documentation, transparency, human oversight, and cybersecurity[2][7].

The deadlines are clear: high-risk systems must comply by 2 August 2026, while safety components integrated into regulated products have until 2 August 2027 to meet requirements[2]. For General Purpose AI (GPAI) models that pose systemic risks, the obligations are even stricter. These include in-depth risk evaluations, enhanced cybersecurity protocols, and mandatory incident reporting[1].

Failing to comply comes with serious consequences. For the most serious breaches, such as using prohibited AI practices, companies could face fines of up to €35 million or 7% of their global annual turnover, whichever is higher[2]. This makes proper certification not just a legal necessity but a critical financial consideration for any business planning to operate in or sell to the EU.
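The cap itself is a simple "whichever is higher" calculation. The sketch below assumes the three penalty tiers set out in Article 99 of the Act: €35 million or 7% for prohibited practices, €15 million or 3% for most other obligations, and €7.5 million or 1% for supplying incorrect information. It also assumes the rule that SMEs and start-ups face whichever amount is lower. The tier keys and function name are hypothetical, and the result is only an upper bound, since actual fines are set case by case.

```python
# Caps per tier: (fixed amount in EUR, share of total worldwide annual turnover).
FINE_TIERS: dict[str, tuple[int, float]] = {
    "prohibited_practice": (35_000_000, 0.07),
    "other_obligation": (15_000_000, 0.03),
    "incorrect_information": (7_500_000, 0.01),
}


def max_fine_eur(tier: str, worldwide_turnover_eur: float, is_sme: bool = False) -> float:
    """Upper bound of the fine for a tier; the amount actually imposed is set case by case."""
    fixed, share = FINE_TIERS[tier]
    turnover_based = share * worldwide_turnover_eur
    # Larger undertakings face whichever cap is higher; SMEs and start-ups whichever is lower.
    return min(fixed, turnover_based) if is_sme else max(fixed, turnover_based)


if __name__ == "__main__":
    for turnover in (100e6, 2e9):
        cap = max_fine_eur("prohibited_practice", turnover)
        print(f"Turnover EUR {turnover:,.0f}: cap EUR {cap:,.0f}")
```

For a business with €100 million in turnover, 7% is only €7 million, so the fixed €35 million figure sets the cap. For a €2 billion business, the turnover-based figure takes over.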

What Notified Bodies Do

Notified bodies play a pivotal role in this process. These are independent organisations appointed by EU Member States to carry out conformity assessments for high-risk AI systems. Their job is to ensure that an AI system aligns with the EU AI Act's requirements before it hits the market. Each Member State must designate national competent authorities and a single point of contact to help businesses access these bodies for assessments[7].

The responsibilities of notified bodies are extensive. They review technical documentation, assess risk management procedures, scrutinise data governance practices, and issue certificates once a system passes. For businesses, this means preparing comprehensive records, including details about model architecture, training data, bias mitigation methods, and cybersecurity measures, before approaching a notified body for evaluation[7].

Oversight of this framework is provided by the EU AI Office, supported by an AI Board, an advisory forum, and a panel of independent experts. These groups ensure consistency in how the framework is applied across all Member States[7].

How the EU AI Act Connects with Other Regulations

The EU AI Act doesn't exist in a vacuum - it works in tandem with other regulations like the Digital Services Act (DSA) and the Data Governance Act (DGA). The DSA focuses on holding online platforms accountable and managing content moderation, while the DGA establishes rules for data sharing. Together, they create a unified digital environment that ties AI safety to data rights and platform responsibilities[1][2].

For example, the transparency commitments in the GPAI Code of Practice complement the DSA by clarifying how AI models generate content. This harmonisation across regulations helps businesses demonstrate compliance more easily, cutting down on duplicate evaluations. The GPAI Code of Practice, published on 10 July 2025 and confirmed as adequate by the European Commission and the AI Board on 1 August 2025, is a voluntary tool that model providers can use to show adherence to Articles 53 and 55, which cover transparency, copyright, safety, and security[4][5][8]. Companies that sign up can rely on it to demonstrate compliance, simplifying the certification process across multiple regulatory areas. This interconnected system gives businesses a clear path for developing their AI certification strategies.

Current AI Certification Schemes and Standards

The EU AI Act lays the groundwork for how AI systems are regulated, but certification schemes take it a step further by defining compliance through specific assessments and standards. These schemes include mandatory checks for high-risk AI, voluntary assurance for general-purpose AI models, and harmonised technical standards to streamline compliance. The key is understanding which scheme applies to your system - this determines how you meet your obligations. Let’s break down the main types of certification and what they mean in practice.

Conformity Assessment for High-Risk AI Systems

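If your AI system is considered high-risk under Article 6 of the EU AI Act, you’ll need to complete a conformity assessment before it can be introduced to the EU market. High-risk systems fall into two groups. The first is AI used as a safety component of products already covered by the EU product legislation listed in Annex I, such as medical devices. The second is the use cases listed in Annex III, such as recruitment, credit scoring, and critical infrastructure.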

To get started, you’ll need to prepare a technical file. This file should include details about your system’s architecture, its intended use, datasets, performance metrics, limitations, and risk management records. You’ll also need evidence of testing to meet the Act’s requirements.
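A lightweight way to keep the technical file honest is to run a completeness check alongside your documentation. The sketch below assumes the section names listed above and a mapping from each section to a document reference. Both the section keys and the `TechnicalFile` class are hypothetical, and Annex IV of the Act remains the authoritative list of what the technical documentation must contain.

```python
from dataclasses import dataclass, field

# Sections named in this article; Annex IV of the AI Act is the authoritative list.
REQUIRED_SECTIONS: tuple[str, ...] = (
    "system_architecture",
    "intended_purpose",
    "datasets",
    "performance_metrics",
    "known_limitations",
    "risk_management_records",
    "test_evidence",
)


@dataclass
class TechnicalFile:
    system_name: str
    # Maps a section name to a document reference (e.g. a path in your docs repository).
    sections: dict[str, str] = field(default_factory=dict)

    def missing_sections(self) -> list[str]:
        """Required sections that are absent or contain only whitespace."""
        return [s for s in REQUIRED_SECTIONS if not self.sections.get(s, "").strip()]

    def is_complete(self) -> bool:
        return not self.missing_sections()


if __name__ == "__main__":
    tf = TechnicalFile("cv-screener", {"system_architecture": "docs/architecture.md"})
    print(tf.system_name, "missing:", tf.missing_sections())
```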

There are two paths for assessment:

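- Internal Assessment: Most Annex III systems (points 2 to 8, such as recruitment or credit scoring) are assessed by the provider itself through internal control. Biometric systems (Annex III, point 1) can use this route only if harmonised standards or common specifications have been applied in full.

- Notified Body Audit: Biometric systems that haven’t fully applied those standards must be assessed by an independent notified body. High-risk AI in Annex I products follows the conformity assessment procedure of the relevant product legislation, which often already involves a notified body.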

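From 2 August 2025, the AI Act’s rules on notifying authorities and notified bodies apply, so Member States must have the authorities in place that designate and monitor these bodies [3]. Once the conformity assessment is complete, you’ll issue an EU declaration of conformity and affix the CE mark. For Annex III systems, you’ll also need to register the system in the EU database before marketing it within the EU.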

Code of Practice for General-Purpose AI

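For AI models designed for broad, multi-purpose applications, compliance revolves around transparency, documentation, risk management, copyright safeguards, and incident reporting. Instead of a CE mark, providers demonstrate compliance through several measures. These include technical documentation for the AI Office and downstream providers, a copyright policy, and a public summary of the content used for training.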

The General-Purpose AI Code of Practice, published on 10 July 2025, is a voluntary tool that the European Commission and the AI Board confirmed as adequate on 1 August 2025. Providers who sign up and follow the Code can use their adherence as evidence of compliance with Articles 53 and 55 of the AI Act. Regulators are also likely to use this Code as a benchmark when evaluating whether providers have taken appropriate steps.

The Code covers a range of important areas, including:

- Model governance and safety evaluations

- Testing for adversarial attacks and misuse

- Red-teaming exercises

- Content labelling and transparency disclosures

- Collaboration with downstream users and relevant authorities

Signing up early to this Code sends a strong message about your commitment to safety and responsible AI deployment.

Harmonised Standards and Technical Specifications

The European Commission is working with standardisation bodies like CEN/CENELEC and ETSI to create harmonised standards that provide a presumption of conformity with the AI Act. Once these standards are published in the Official Journal, businesses can use them to simplify compliance processes [9].

Internationally, standards such as ISO/IEC 42001, which sets out requirements for an AI management system, offer structured governance frameworks that make conformity assessments and audits more straightforward. On its own, ISO/IEC 42001 does not confer a presumption of conformity with the AI Act. However, aligning your AI development and governance with it supports smoother audits and consistent documentation.

For UK companies aiming to work within the EU market, adopting these standards can serve as a bridge when collaborating with EU-based partners or auditors. Many UK firms appoint an AI governance lead or work with specialised partners like Metamindz to design efficient, standards-aligned architectures. By building compliance into the development process from the start, businesses avoid the costly headache of retrofitting their systems later on.

How to Achieve AI Certification: Practical Steps

Getting certified isn’t just about ticking boxes - it’s about weaving compliance into the fabric of your AI systems right from the start. The EU AI Act lays out strict deadlines, and scrambling at the eleventh hour could lead to rushed audits, incomplete documentation, and hefty fines of up to €35 million or 7% of your global turnover. But with a clear plan, thorough documentation, and the right expertise, certification becomes a manageable task. Here's a practical guide to help you navigate the process.

Building Your AI Certification Strategy

The first step is to categorise your AI systems based on the EU’s risk groups. For planning purposes, it helps to sort them into five groups:

- prohibited practices
- high-risk systems (like recruitment tools or credit scoring)
- general-purpose AI (GPAI) models with systemic risk (presumed when cumulative training compute exceeds 10^25 floating-point operations)
- other GPAI models
- systems that carry only transparency obligations or minimal risk

Each category comes with its own requirements. For instance, prohibited practices have been banned since 2 February 2025, GPAI obligations apply from 2 August 2025, and most high-risk system obligations take effect on 2 August 2026 [2].
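A first-pass triage of an AI inventory can be sketched as a series of ordered checks, with prohibited uses always taking precedence. The tag names below are hypothetical stand-ins for a proper legal analysis under Article 5, Article 6 and Annex III. The sketch also glosses over the fact that the Act regulates GPAI models and AI systems separately, so treat its output as a starting point for review rather than a classification.

```python
from enum import Enum


class Category(Enum):
    PROHIBITED = "prohibited practice"
    HIGH_RISK = "high-risk system"
    GPAI_SYSTEMIC = "GPAI model with systemic risk"
    GPAI = "GPAI model"
    TRANSPARENCY = "transparency obligations only"
    MINIMAL = "minimal risk"


# Hypothetical use-case tags; a real assessment works from Article 5, Article 6 and Annex III.
PROHIBITED_TAGS = {"social_scoring", "workplace_emotion_recognition", "untargeted_face_scraping"}
HIGH_RISK_TAGS = {"recruitment", "credit_scoring", "critical_infrastructure", "exam_proctoring"}
TRANSPARENCY_TAGS = {"chatbot", "synthetic_media"}


def categorise(tags: set[str], is_gpai_model: bool = False, systemic_risk: bool = False) -> Category:
    """First-pass triage: checks run in a fixed order and prohibited uses always win."""
    if tags & PROHIBITED_TAGS:
        return Category.PROHIBITED
    if is_gpai_model:
        return Category.GPAI_SYSTEMIC if systemic_risk else Category.GPAI
    if tags & HIGH_RISK_TAGS:
        return Category.HIGH_RISK
    if tags & TRANSPARENCY_TAGS:
        return Category.TRANSPARENCY
    return Category.MINIMAL


if __name__ == "__main__":
    inventory = {"cv-screener": {"recruitment"}, "support-bot": {"chatbot"}, "spellcheck": set()}
    for name, tags in inventory.items():
        print(name, "->", categorise(tags).value)
```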

Next, conduct an internal audit of your AI systems. Examine each use case: does it involve biometric categorisation, emotion recognition, or critical infrastructure? If so, it’s likely high-risk, and some uses, such as emotion recognition in workplaces or schools, are prohibited outright. For GPAI providers, check whether your model’s training compute exceeds the 10^25 FLOP threshold, which would trigger the additional systemic-risk obligations, including enhanced risk assessments [1][2]. Once you’ve classified your systems, map out a compliance roadmap with clear milestones. Focus on documenting training data summaries, model architecture, and risk assessments. Assign responsibilities - appoint an AI officer or Data Protection Officer (DPO) and allocate funds for notified body assessments [2][7]. This structured approach reduces both regulatory and financial risks.
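For the compute check, a common rule of thumb for dense transformer models puts training compute at roughly 6 FLOP per parameter per training token. The sketch below uses that heuristic and compares the result against the 10^25 FLOP presumption in Article 51(2). The heuristic is an assumption, not a method prescribed by the Act, and where you have logged the actual training compute you should use that figure instead. The function names are hypothetical.

```python
# Article 51(2): a GPAI model is presumed to have high-impact capabilities (systemic risk)
# when the cumulative compute used for its training is greater than 10^25 FLOP.
SYSTEMIC_RISK_THRESHOLD_FLOP = 1e25


def estimate_training_flop(n_parameters: float, n_training_tokens: float) -> float:
    """Rule of thumb for dense transformers: about 6 FLOP per parameter per training token.

    This is only a rough estimate; prefer logged training compute when it is available.
    """
    return 6.0 * n_parameters * n_training_tokens


def presumed_systemic_risk(training_flop: float) -> bool:
    """True when the compute strictly exceeds the Article 51(2) threshold."""
    return training_flop > SYSTEMIC_RISK_THRESHOLD_FLOP


if __name__ == "__main__":
    for params, tokens in ((7e9, 2e12), (70e9, 15e12), (400e9, 15e12)):
        flop = estimate_training_flop(params, tokens)
        print(f"{params:.0e} params, {tokens:.0e} tokens -> {flop:.1e} FLOP, "
              f"systemic risk presumed: {presumed_systemic_risk(flop)}")
```

Using this heuristic, a 70-billion-parameter model trained on 15 trillion tokens comes out at about 6.3 x 10^24 FLOP, which falls below the threshold.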

Preparing Required Documentation and Governance

After laying out your strategy, the next step is creating solid documentation and governance practices. Documentation is the backbone of certification. You’ll need a comprehensive technical file that includes system architecture, training data summaries, bias mitigation strategies, and incident protocols. Pair this with a Data Protection Impact Assessment (DPIA) that aligns with GDPR [1][6]. For GPAI systems, don’t forget to include copyright compliance policies and transparency disclosures for downstream users. The European Commission’s GPAI disclosure guidance, published on 18 July 2025, provides templates to structure these submissions [5][6].

Let’s say you’re working on a high-risk AI hiring tool. Your risk register might look like this: identify risks (e.g., bias in CV screening), list mitigation steps (like adversarial testing and diverse training data), assign a residual risk score (e.g., low after mitigation), and name the team member responsible for monitoring it. Model descriptions should cover the architecture (e.g., transformer-based), intended use (e.g., GPAI for text generation), parameters, and safety evaluations, such as safeguards against personal data leaks [1][6].
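The risk register described above maps naturally onto a small data structure. This sketch assumes a 1-5 likelihood and impact scale, with low/medium/high cut-offs chosen purely for illustration. The Act does not prescribe a scoring method, and the field and function names are hypothetical.

```python
from dataclasses import dataclass, field


def band(score: int) -> str:
    """Map a 1-25 likelihood x impact score to a band (cut-offs are an assumption)."""
    if score <= 4:
        return "low"
    if score <= 12:
        return "medium"
    return "high"


@dataclass
class RiskEntry:
    risk: str
    owner: str
    likelihood: int  # residual likelihood after mitigation, 1 (rare) to 5 (almost certain)
    impact: int      # residual impact after mitigation, 1 (negligible) to 5 (severe)
    mitigations: list[str] = field(default_factory=list)

    def __post_init__(self) -> None:
        # Reject scores outside the assumed 1-5 scale.
        for name in ("likelihood", "impact"):
            value = getattr(self, name)
            if not 1 <= value <= 5:
                raise ValueError(f"{name} must be between 1 and 5, got {value}")

    @property
    def residual_score(self) -> int:
        return self.likelihood * self.impact

    @property
    def residual_band(self) -> str:
        return band(self.residual_score)


def needs_escalation(register: list[RiskEntry]) -> list[RiskEntry]:
    """Entries whose residual risk is still medium or high."""
    return [entry for entry in register if entry.residual_band != "low"]


if __name__ == "__main__":
    register = [
        RiskEntry("Bias in CV screening", owner="ML lead", likelihood=2, impact=2,
                  mitigations=["adversarial testing", "diverse training data"]),
        RiskEntry("Personal data leakage in logs", owner="Security lead", likelihood=2, impact=4,
                  mitigations=["log redaction"]),
    ]
    for entry in register:
        print(entry.risk, entry.residual_score, entry.residual_band)
    print("Escalate:", [e.risk for e in needs_escalation(register)])
```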

Good governance is equally critical. AI literacy obligations have applied since 2 February 2025, so role-specific training should already be under way [2]. Maintain risk registers that track systemic risks, implement adversarial testing protocols, and set up incident response plans for serious incidents. For GPAI providers, regular audits aligned with the GPAI Code of Practice are essential. Cybersecurity measures like protecting model weights should also be part of your governance framework [1][2][8]. Appointing a DPO helps ensure accountability and keeps your governance aligned with both the AI Act and GDPR. Strong governance not only reduces compliance risk but also keeps you audit-ready.

Working with Technical Experts and Notified Bodies

Once your documentation is sorted, the final step is working with experts to streamline certification. For high-risk systems that require third-party assessment, this means engaging a notified body. A notified body is an independent organisation designated by an EU Member State to conduct conformity assessments. These bodies verify compliance with the Act’s requirements, typically by reference to harmonised standards. High-risk AI used as a safety component in regulated products comes into scope from 2 August 2027. Make sure your documentation is thorough and easy to access to avoid delays that could impact your market entry [7].

For businesses in the UK and Europe, collaborating with technical experts or fractional CTOs can simplify this process. Companies like Metamindz offer hands-on support, from architecture guidance and code reviews to risk assessments and due diligence reports. They can even help with scalability, security, and debugging. Their UK- and Europe-based teams integrate seamlessly into your workflow - whether through shared Slack channels, weekly check-ins, or daily updates - to prepare documentation, run adversarial tests, and coordinate with notified bodies. Typically, the process kicks off with a session to map your AI stack against EU risk categories, ensuring you’re clear on the requirements [1][2].

"I would highly recommend Lev as a fractional CTO. What truly sets him apart is his rare combination of deep technical expertise and business acumen, coupled with a genuine investment in seeing others succeed. His ability to assess technical challenges and architect solutions while considering the broader business context has been invaluable." - Tanya Mulesa, Founder, Aeva Health [12]

Bringing in experts early can spare you costly retrofits later. By embedding compliance into your development process from day one, you’ll save time, minimise risk, and set yourself up for smoother audits and quicker market access.

Conclusion: Preparing for AI Certification Requirements

The EU AI Act is officially in play, with deadlines stretching from 2 February 2025 to 2 August 2027. For businesses in the UK and Europe, this means conformity assessments for high-risk systems are no longer optional, and neither are the documentation and transparency duties for general-purpose AI models. Falling short of compliance could lead to fines as steep as €35 million or 7% of global annual turnover [2][10]. This isn’t just a technical box-ticking exercise - it’s a major boardroom issue.

Starting early can save you a lot of headaches. By getting ahead now, you’ll have time to map out your AI portfolio against the EU’s risk categories, tweak system architectures if needed, and establish solid governance without throwing your product timelines off track [2][10]. Building certification requirements into your design and procurement processes from day one will also help you avoid costly adjustments down the line [1][6]. For UK companies working with EU clients, early compliance ensures your AI products stay eligible for EU tenders and collaborations once enforcement tightens [10].

Here’s what you can do now: take stock of your AI systems, categorise them according to the Act’s risk levels, check for documentation gaps, and assign clear responsibilities across your teams [2][6][10]. Aligning with frameworks like the GPAI Code of Practice and standards such as ISO/IEC 42001 will make your interactions with notified bodies much smoother. Having your risk assessments, technical documentation, and testing results already organised in the expected format will save you a ton of time later [4][5][9][11]. These steps are the groundwork for a less stressful certification process.

If you want to speed things up, combining strong internal governance with external expertise can make a big difference. Bringing in technical specialists early can help ensure compliance and market readiness. A CTO-led partner like Metamindz, for instance, can evaluate your AI system design, data workflows, security measures, and MLOps practices against the EU AI Act. They can also guide your team through code reviews, refactoring, and improving documentation. Their "tech health" reports and due diligence checks give boards and investors confidence that your AI products can stand up to scrutiny, particularly in areas like safety, robustness, and data protection [1][2][11]. Plus, oversight of AI-generated code helps keep your systems reliable, secure, and ready for conformity assessments.

Investing now in solid documentation, thorough testing, and good governance won’t just keep you on the right side of regulators - it could also set you apart in the market. Companies that can prove their AI systems are safe and reliable will have an edge with customers, partners, investors, and regulators alike. By blending strong internal processes with expert guidance, UK and European businesses can continue to innovate while staying on top of evolving certification standards.

FAQs

What happens if a business doesn’t comply with the EU AI Act?

Failing to adhere to the EU AI Act comes with hefty penalties. For the most serious breaches, such as using prohibited AI practices, organisations could be slapped with fines reaching up to €35,000,000 or 7% of their annual global turnover, whichever is higher. Most other breaches carry lower caps of up to €15,000,000 or 3%. On top of that, AI systems deemed non-compliant or unsafe might face restrictions or outright bans within the EU.

This underscores just how crucial it is for businesses, especially those operating in or eyeing the European market, to ensure their AI systems align with the law's stringent requirements. Getting it wrong could cost more than just money - it could mean losing access to one of the world's largest markets.

What steps can businesses take to prepare for AI certification under the EU AI Act?

To gear up for AI certification under the EU AI Act, businesses need to zero in on three main priorities: carrying out thorough technical assessments, putting in place robust risk management practices, and maintaining complete transparency in how their AI systems are designed and operated.

Working with skilled, CTO-led teams can be a game-changer. These professionals bring the expertise needed to create scalable AI architectures that tick all the compliance boxes. They can guide businesses through the certification process, handle due diligence, and set clear technical benchmarks to align with the ever-changing regulatory landscape.

What is the role of notified bodies in AI certification in Europe?

Notified bodies are independent organisations, designated by EU Member States, that check whether high-risk AI systems requiring third-party assessment meet the AI Act's requirements and the relevant European standards. Their job is to assess whether these systems meet the necessary safety, performance, and legal requirements.

By providing certifications, these bodies give businesses a way to prove they’re meeting the rules. This not only makes entering the market easier but also helps build trust with users and partners throughout Europe.